Alejandro Sztrajman

Publications


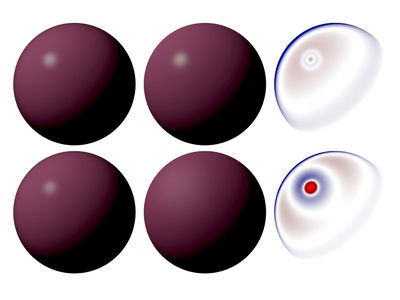

M³ashy: Multi-Modal Material Synthesis via Hyperdiffusion

Chenliang Zhou, Zheyuan Hu, Alejandro Sztrajman, Yancheng Cai, Yaru Liu, Cengiz Oztireli. Proceedings of the AAAI Conference on Artificial Intelligence, 2026.

Abstract: High-quality material synthesis is essential for replicating complex surface properties to create realistic scenes. Despite advances in the generation of material appearance based on analytic models, the synthesis of real-world measured BRDFs remains largely unexplored. To address this challenge, we propose M³ashy, a novel multi-modal material synthesis framework based on hyperdiffusion. M³ashy enables high-quality reconstruction of complex real-world materials by leveraging neural fields as a compact continuous representation of BRDFs. Furthermore, our multi-modal conditional hyperdiffusion model allows for flexible material synthesis conditioned on material type, natural language descriptions, or reference images, providing greater user control over material generation. To support future research, we contribute two new material datasets and introduce two BRDF distributional metrics for more rigorous evaluation. We demonstrate the effectiveness of M³ashy through extensive experiments, including a novel statistics-based constrained synthesis, which enables the generation of materials of desired categories.

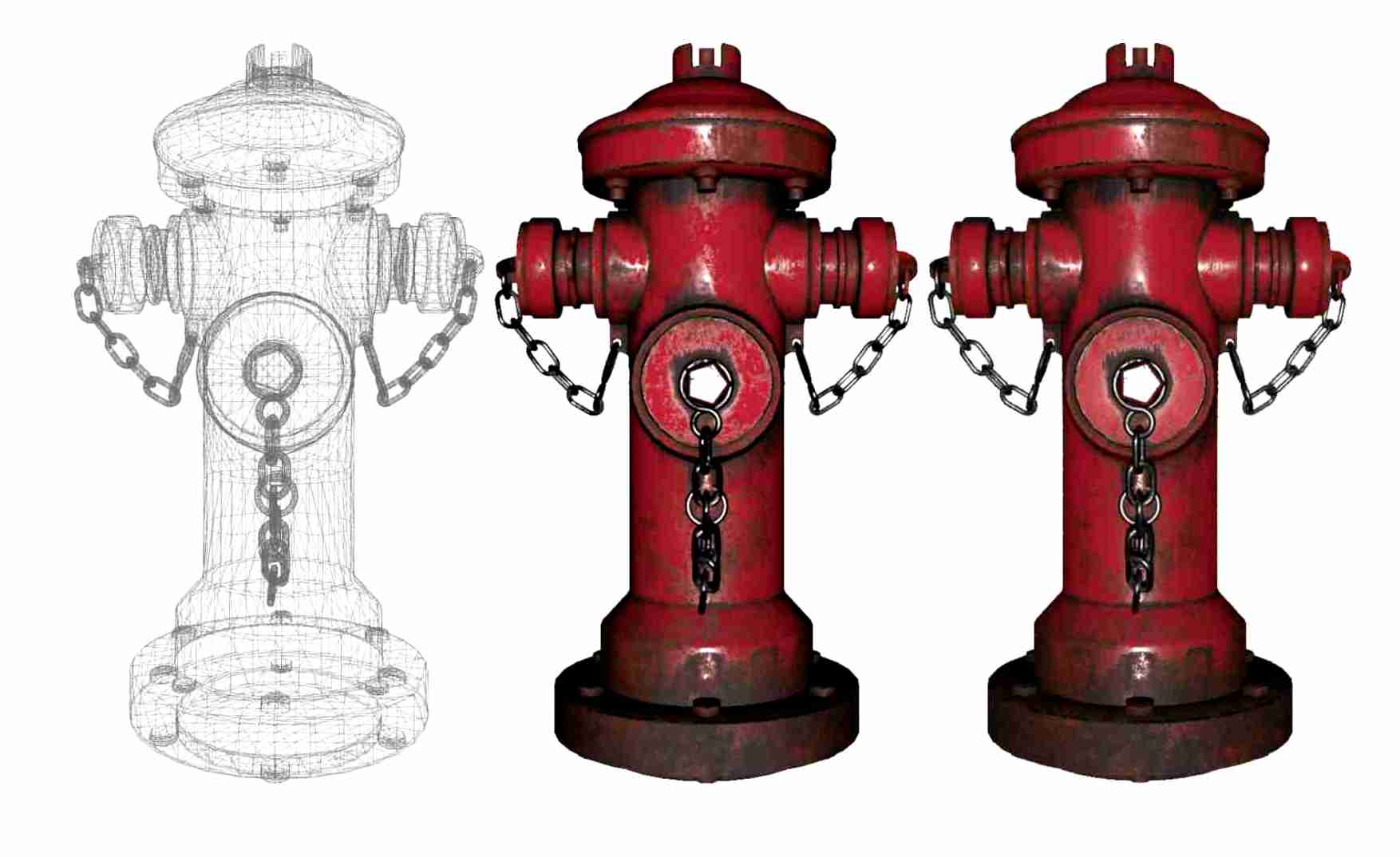

Physically Based Neural Bidirectional Reflectance Distribution Function

Chenliang Zhou, Alejandro Sztrajman, Gilles Rainer, Fangcheng Zhong, Fazilet Gokbudak, Zhilin Guo, Weihao Xia, Rafal Mantiuk, Cengiz Oztireli. Computer Graphics Forum (CGF), 2026.

Abstract: We introduce the physically based neural bidirectional reflectance distribution function (PBNBRDF), a novel, continuous representation for material appearance based on neural fields. Our model accurately reconstructs real-world materials while uniquely enforcing physical properties for realistic BRDFs, specifically Helmholtz reciprocity via reparametrization and energy passivity via efficient analytical integration. We conduct a systematic analysis demonstrating the benefits of adhering to these physical laws on the visual quality of reconstructed materials. Additionally, we enhance the color accuracy of neural BRDFs by introducing chromaticity enforcement supervising the norms of RGB channels. Through both qualitative and quantitative experiments on multiple databases of measured real-world BRDFs, we show that adhering to these physical constraints enables neural fields to more faithfully and stably represent the original data and achieve higher rendering quality.

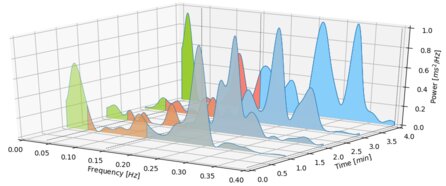

LSCD: Lomb–Scargle Conditioned Diffusion for Irregular Time series Imputation

Alejandro Sztrajman*, Elizabeth Fons*, Yousef El-Laham, Luciana Ferrer, Svitlana Vyetrenko, Manuela Veloso. International Conference on Machine Learning (ICML), 2025.

Abstract: Time series imputation with missing or irregularly sampled data is a persistent challenge in machine learning. Most frequency-domain methods rely on the Fast Fourier Transform (FFT), which assumes uniform sampling, therefore requiring interpolation or imputation prior to frequency estimation. We propose a novel diffusion-based imputation approach (LSCD) that leverages Lomb–Scargle periodograms to robustly handle missing and irregular samples without requiring interpolation or imputation in the frequency domain. Our method trains a score-based diffusion model conditioned on the entire signal spectrum, enabling direct usage of irregularly spaced observations. Experiments on synthetic and real-world benchmarks demonstrate that our method recovers missing data more accurately than purely time-domain baselines, while simultaneously producing consistent frequency estimates. Crucially, our framework paves the way for broader adoption of Lomb–Scargle methods in machine learning tasks involving incomplete or irregular data.

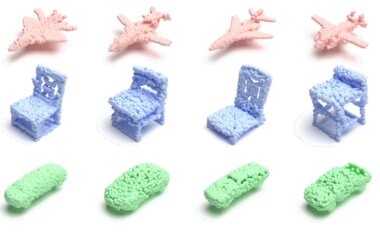

FrePolad: Frequency-Rectified Point Latent Diffusion for Point Cloud Generation

Chenliang Zhou, Fangcheng Zhong, Param Hanji, Zhilin Guo, Kyle Fogarty, Alejandro Sztrajman, Hongyun Gao, Cengiz Oztireli. European Conference on Computer Vision (ECCV), 2024.

Abstract: We propose FrePolad: frequency-rectified point latent diffusion, a point cloud generation pipeline integrating a variational autoencoder (VAE) with a denoising diffusion probabilistic model (DDPM) for the latent distribution. FrePolad simultaneously achieves high quality, diversity, and flexibility in point cloud cardinality for generation tasks while maintaining high computational efficiency. The improvement in generation quality and diversity is achieved through (1) a novel frequency rectification via spherical harmonics designed to retain high-frequency content while learning the point cloud distribution; and (2) a latent DDPM to learn the regularized yet complex latent distribution. In addition, FrePolad supports variable point cloud cardinality by formulating the sampling of points as conditional distributions over a latent shape distribution. Finally, the low-dimensional latent space encoded by the VAE contributes to FrePolad's fast and scalable sampling. Our quantitative and qualitative results demonstrate FrePolad's state-of-the-art performance in terms of quality, diversity, and computational efficiency.

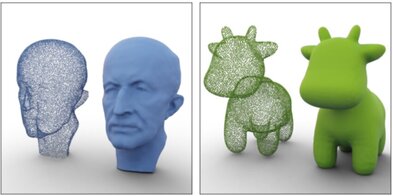

Hypernetworks for Generalizable BRDF Estimation

Fazilet Gokbudak, Alejandro Sztrajman, Chenliang Zhou, Fangcheng Zhong, Rafal Mantiuk, Cengiz Oztireli. European Conference on Computer Vision (ECCV), 2024.

Abstract: In this paper, we introduce a technique to estimate measured BRDFs from a sparse set of samples. Our approach offers accurate BRDF reconstructions that are generalizable to new materials. This opens the door to BRDF reconstructions from a variety of data sources. The success of our approach relies on the ability of hypernetworks to generate a robust representation of BRDFs and a set encoder that allows us to feed inputs of different sizes to the architecture. The set encoder and the hypernetwork also enable the compression of densely sampled BRDFs. We evaluate our technique both qualitatively and quantitatively on the well-known MERL dataset of 100 isotropic materials. Our approach accurately 1) estimates the BRDFs of unseen materials even for an extremely sparse sampling, 2) compresses the measured BRDFs into very small embeddings, e.g., 7D.

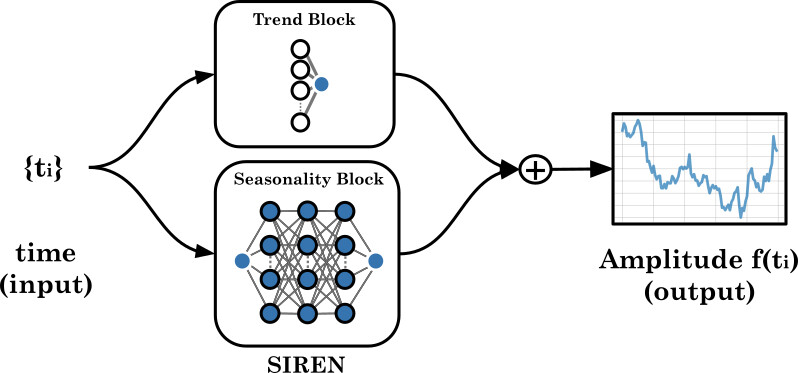

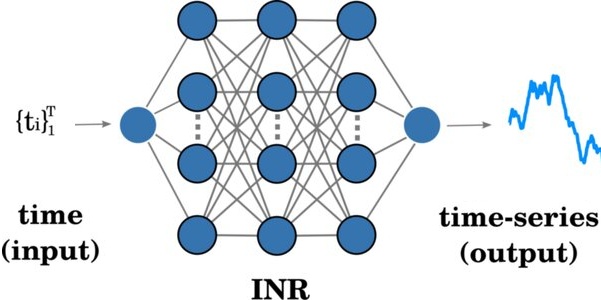

iHyperTime: Interpretable Time Series Generation with Implicit Neural Representations

Elizabeth Fons, Alejandro Sztrajman, Yousef El-Laham, Andrea Coletta, Alexandros Iosifidis, Svitlana Vyetrenko. Transactions on Machine Learning Research (TMLR), 2024.

Abstract: Implicit neural representations (INRs) have emerged as a powerful tool that provides an accurate and resolution-independent encoding of data. Their robustness as general approximators has been shown across diverse data modalities, such as images, video, audio, and 3D scenes. However, little attention has been given to leveraging these architectures for time series data. Addressing this gap, we propose an approach for time series generation based on two novel architectures: TSNet, an INR network for interpretable trend-seasonality time series representation, and iHyperTime, a hypernetwork architecture that leverages TSNet for time series generalization and synthesis. Through evaluations of fidelity and usefulness metrics, we demonstrate that iHyperTime outperforms current state-of-the-art methods in challenging scenarios that involve long or irregularly sampled time series, while performing on par on regularly sampled data. Furthermore, we showcase iHyperTime's fast training speed, comparable to the fastest existing methods for short sequences and significantly superior for longer ones. Finally, we empirically validate the quality of the model's unsupervised trend-seasonality decomposition by comparing against the well-established STL method.

HDR Image Deglaring via MTF Inversion with Enhanced Low-Frequency Characterisation

Alejandro Sztrajman, Hongyun Gao, Rafal Mantiuk. London Imaging Meeting (LIM), 2024.

Abstract: The Modulation Transfer Function (MTF) characterises an imaging system’s ability to reproduce different spatial frequencies. Its accurate estimation is essential for effective image restoration and deglaring. Traditional methods struggle to capture low-frequency components, which affects the effective reconstruction of large-scale image structures. This work proposes a novel approach to HDR image deglaring that improves the characterisation of low-frequency components in the MTF. We capture a disc-shaped light source and optimise a parametric MTF model to reproduce the observed light spread pattern, offering a robust solution for image deglaring.

Neural Fields with Hard Constraints of Arbitrary Differential Order

Fangcheng Zhong, Kyle Fogarty, Param Hanji, Tianhao Wu, Alejandro Sztrajman, Andrew Spielberg, Andrea Tagliasacchi, Petra Bosilj, Cengiz Oztireli. Conference on Neural Information Processing Systems (NeurIPS), 2023.

Abstract: While deep learning techniques have become extremely popular for solving a broad range of optimization problems, methods to enforce hard constraints during optimization, particularly on deep neural networks, remain underdeveloped. Inspired by the rich literature on meshless interpolation and its extension to spectral collocation methods in scientific computing, we develop a series of approaches for enforcing hard constraints on neural fields, which we refer to as Constrained Neural Fields (CNF). The constraints can be specified as a linear operator applied to the neural field and its derivatives. We also design specific model representations and training strategies for problems where standard models may encounter difficulties, such as conditioning of the system, memory consumption, and capacity of the network when being constrained. Our approaches are demonstrated in a wide range of real-world applications. Additionally, we develop a framework that enables highly efficient model and constraint specification, which can be readily applied to any downstream task where hard constraints need to be explicitly satisfied during optimization.

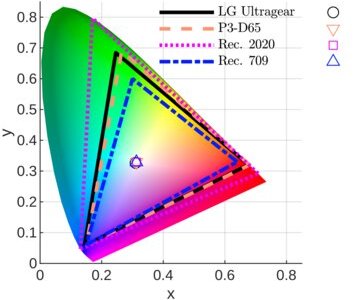

Color Calibration Methods for OLED Displays

Maliha Ashraf, Alejandro Sztrajman, Dounia Hammou, Rafal Mantiuk. Electronic Imaging, 2023.

Abstract: Accurate color reproduction on a display requires an inverse display model, mapping colorimetric values (e.g. CIE XYZ) into RGB values driving the display. To create such a model, we collected a large dataset of display color measurements for a high refresh-rate 4-primary OLED display. We demonstrated that, unlike traditional LCD displays, multi-primary OLED display color responses are non-additive and non-linear for some colors. We tested the performances of different regression methods: polynomial regression, look-up tables, multi-layer perceptrons, and others. The best-performing models were additionally validated on newly measured (unseen) test colors. We found that the performances of several variations of 4th-degree polynomial models were comparable to the look-up table and machine-learning-based models while being less resource-intensive.

HyperTime: Implicit Neural Representations for Time-Series

Elizabeth Fons, Alejandro Sztrajman, Yousef El-Laham, Alexandros Iosifidis, Svitlana Vyetrenko. NeurIPS SyntheticData4ML, 2022.

Abstract: Implicit neural representations (INRs) have recently emerged as a powerful tool that provides an accurate and resolution-independent encoding of data. Their robustness as general approximators has been shown in a wide variety of data sources, with applications on image, sound, and 3D scene representation. However, little attention has been given to leveraging these architectures for the representation and analysis of time series data. In this paper, we analyze the representation of time series using INRs, comparing different activation functions in terms of reconstruction accuracy and training convergence speed. Secondly, we propose a hypernetwork architecture that leverages INRs to learn a compressed latent representation of an entire time series dataset. We introduce an FFT-based loss to guide training so that all frequencies are preserved in the time series. We show that this network can be used to encode time series as INRs, and their embeddings can be interpolated to generate new time series from existing ones. We evaluate our generative method by using it for data augmentation, and show that it is competitive against current state-of-the-art approaches for augmentation of time series.

Neural BRDF Representation and Importance Sampling

(Wiley Top Cited Paper) Alejandro Sztrajman, Gilles Rainer, Tobias Ritschel, Tim Weyrich. Computer Graphics Forum (CGF), 2021 (Oral Presentation at EGSR 2022).

Abstract: Controlled capture of real-world material appearance yields tabulated sets of highly realistic reflectance data. In practice, however, its high memory footprint requires compressing into a representation that can be used efficiently in rendering while remaining faithful to the original. Previous works in appearance encoding often prioritised one of these requirements at the expense of the other, by either applying high-fidelity array compression strategies not suited for efficient queries during rendering, or by fitting a compact analytic model that lacks expressiveness. We present a compact neural network-based representation of BRDF data that combines high-accuracy reconstruction with efficient practical rendering via built-in interpolation of reflectance. We encode BRDFs as lightweight networks, and propose a training scheme with adaptive angular sampling, critical for the accurate reconstruction of specular highlights. Additionally, we propose a novel approach to make our representation amenable to importance sampling: rather than inverting the trained networks, we learn an embedding that can be mapped to parameters of an analytic BRDF for which importance sampling is known. We evaluate encoding results on isotropic and anisotropic BRDFs from multiple real-world datasets, and importance sampling performance for isotropic BRDFs mapped to two different analytic models.

Fast Blue Noise Generation via Unsupervised Learning

Alejandro Sztrajman*, Daniele Giunchi*, Anthony Steed. International Joint Conference on Neural Networks (IJCNN), 2022 (Oral Presentation).

Abstract: Blue noise is known for its uniformity in the spatial domain, avoiding the appearance of structures such as voids and clusters. Because of this characteristic, it has been adopted in a wide range of visual computing applications, such as image dithering, rendering and visualisation. This has motivated the development of a variety of generative methods for blue noise, with different trade-offs in terms of accuracy and computational performance. We propose a novel unsupervised learning approach that leverages a neural network architecture to generate blue noise masks with high accuracy and real-time performance, starting from a white noise input. We train our model by combining three unsupervised losses that work by conditioning the Fourier spectrum and intensity histogram of noise masks predicted by the network. We evaluate our method by leveraging the generated noise for two applications: grayscale blue noise masks for image dithering, and blue noise samples for Monte Carlo integration.

Machine Learning Applications in Appearance Modelling

Alejandro Sztrajman. University College London, 2022 (PhD Thesis).

Reviewers: Profs. Lourdes Agapito and Diego Gutierrez.

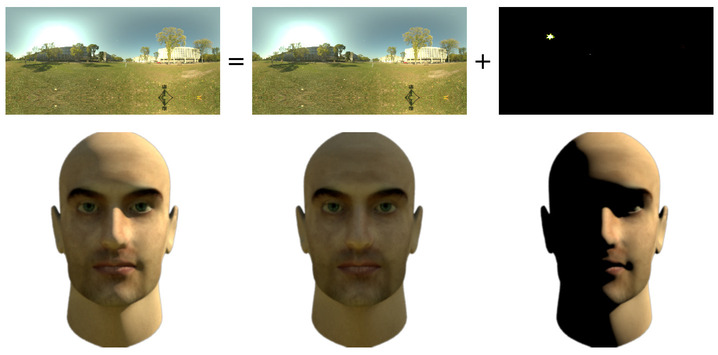

Abstract: In this thesis, we address multiple applications of machine learning in appearance modelling. We do so by leveraging data-driven approaches, guided through the use of image-based error metrics, to generate new representations of material appearance and scene illumination. We first address the interchange of material appearance between different analytic representations, through an image-based optimisation of BRDF model parameters. We analyse the method in terms of stability with respect to variations of the BRDF parameters, and show that it can be used for material interchange between different renderers and workflows, without the need to access shader implementations. We extend our method to enable the remapping of spatially-varying materials, by presenting two regression schemes that allow us to learn the transformation of parameters between models and apply it to texture maps at fast rates. Next, we centre on the efficient representation and rendering of measured material appearance. We develop a neural-based BRDF representation that provides high-quality reconstruction with low storage and competitive evaluation times, comparable with analytic models. Our method compares favourably against other representations in terms of reconstruction accuracy, and we show that it can also be used to encode anisotropic materials. In addition, we generate a unified encoding of real-world materials via a meta-learning autoencoder architecture guided by a differential rendering loss. This enables the generation of new realistic materials by interpolation of embeddings, and the fast estimation of material properties. We show that this can be leveraged for efficient rendering through importance sampling, by predicting the parameters of an invertible analytic BRDF model. Finally, we design a hybrid representation for high-dynamic-range illumination that combines a convolutional autoencoder-based encoding for low-intensity light, and a parametric model for high intensity. Our model provides a flexible compact encoding for environment maps, while also preserving an accurate reconstruction of the high-intensity component, appropriate for rendering purposes. We utilise our light encodings in a second convolutional neural network trained for light prediction from a single outdoor face portrait at interactive rates, with potential applications for real-time light prediction and 3D object insertion.

Mixing Modalities of 3D Sketching and Speech for Interactive Model Retrieval in Virtual Reality

Daniele Giunchi, Alejandro Sztrajman, Stuart James, Anthony Steed. ACM International Conference on Interactive Media Experiences (IMX), New York, USA, Jun 2021 (Oral Presentation).

High-Dynamic-Range Lighting Estimation from Face Portraits

Alejandro Sztrajman, Alexandros Neophytou, Tim Weyrich, Eric Sommerlade. International Conference on 3D Vision (3DV), Fukuoka, Japan, Nov 2020 (Oral Presentation).

Image-based remapping of spatially-varying material appearance

Alejandro Sztrajman, Jaroslav Krivanek, Alexander Wilkie, Tim Weyrich. Journal of Computer Graphics Techniques (JCGT), 8(4), pp. 1-30, 2019.

Image-based remapping of material appearance

Alejandro Sztrajman, Jaroslav Krivanek, Alexander Wilkie, Tim Weyrich. Eurographics Workshop on Material Appearance Modeling (MAM), Helsinki, Finland, Jun 2017 (Oral Presentation).

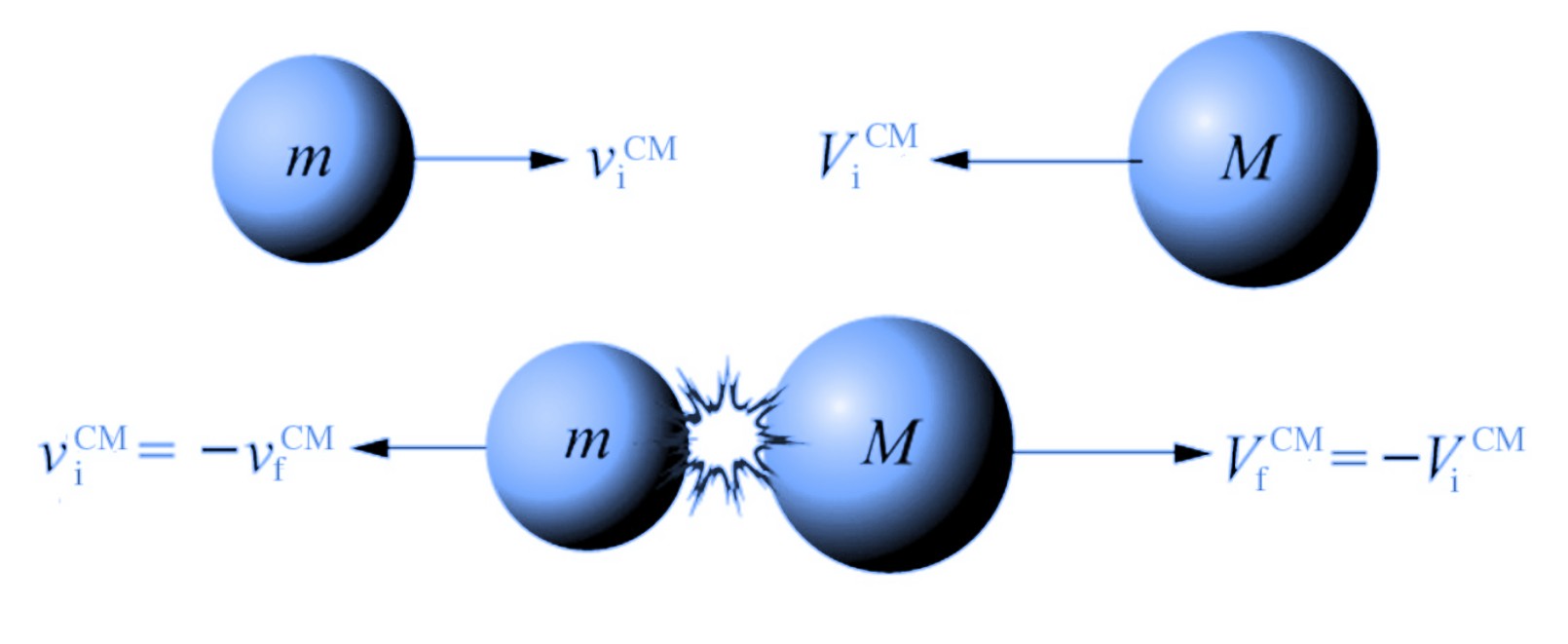

An Easy Way to One-Dimensional Elastic Collisions

Jorge Sztrajman, Alejandro Sztrajman. The Physics Teacher (TPT) 55 (3), pp. 164-165.

Elementary Electromagnetism

Juan G. Roederer. Buenos Aires University Press, Buenos Aires (2015).

I coordinated the editorial team behind the undergraduate textbook by Prof. Juan Roederer. The book was first published in 2015. A second edition was released in 2018, and a digital edition followed in 2020.

Patents


Method and System for Generation of Interpretable Time Series with Implicit Neural Representations

Elizabeth Fons, Svitlana Vyetrenko, Yousef El-Laham, Alejandro Sztrajman, Alexandros Iosifidis. US Patent US20240104358A1 (Pending, 2024).

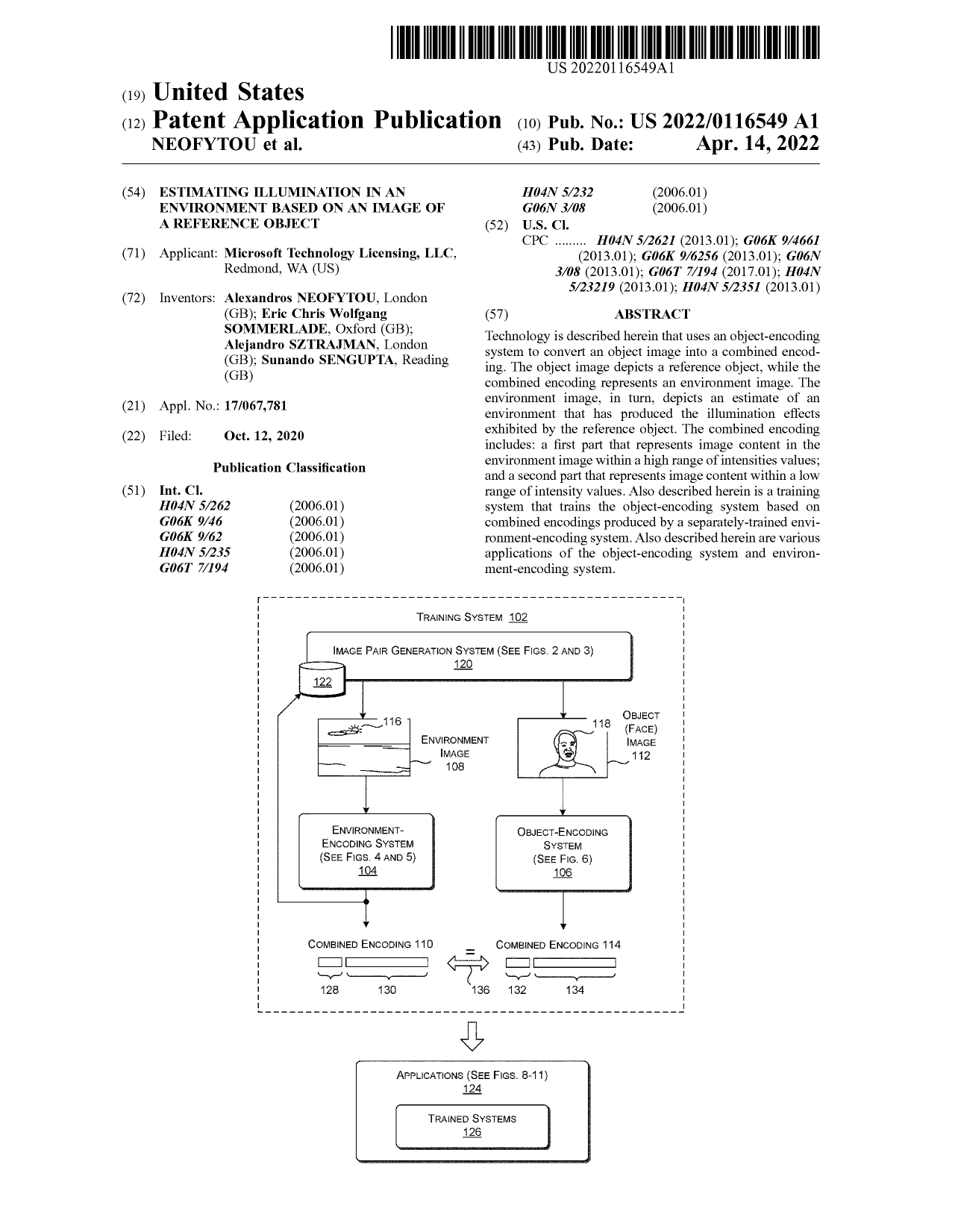

Estimating Illumination in an Environment Based on an Image of a Reference Object

Alexandros Neophytou, Eric Sommerlade, Alejandro Sztrajman, Sunando Sengupta. US Patent US11330196B2 (2022).

Talks


Relightable 3D Gaussian Splatting

University of Cambridge, 19 Dec 2023.

Neural Radiance Fields

Homerton College, University of Cambridge, 14 Aug 2023 | Cambridge Summer Course.

Neural Fields for Data Representation and Generation

Brown University, 17 Oct 2022.

High-Dynamic-Range Lighting Estimation from Face Portraits

Virtual, 27 Nov 2020 | International Conference on 3D Vision.

CNN-based Face Relighting

Microsoft, Seattle, USA (virtual), 12 Mar 2020.

Capture and Editing of Material Appearance

ETH, Zurich, Switzerland, 5 Feb 2018.

Introduction to Convolutional Neural Networks

IST, Vienna, Austria, 14 Nov 2017.

Image-based Remapping of Material Appearance

Helsinki, Finland, 18 Jun 2017 | Eurographics Workshop on Material Appearance Modelling.

Teaching

| Year | Course | Institution |
|---|---|---|
| 2024 | Introduction to Graphics (Invited Lecturer) | University of Cambridge |
| 2023 | Advanced Graphics and Image Processing (Invited Lecturer) | University of Cambridge |
| 2023 | Introduction to Graphics | University of Cambridge |
| 2019 | Advanced Deep Learning and Reinforcement Learning | UCL |
| 2018 | Research Methods and Reading | UCL |
| 2017 | Scientific Programming in Python | UCL |
| 2016 | Robotics Programming | UCL |
| 2016 | Principles of Programming | UCL |