Mixing Modalities of 3D Sketching and Speech for Interactive Model Retrieval in Virtual Reality

1Department of Computer Science, University College London, United Kingdom.

2Visual Geometry and Modelling Lab (VGM), Istituto Italiano di Tecnologia, Italy.

ACM International Conference on Interactive Media Experiences, 2021.

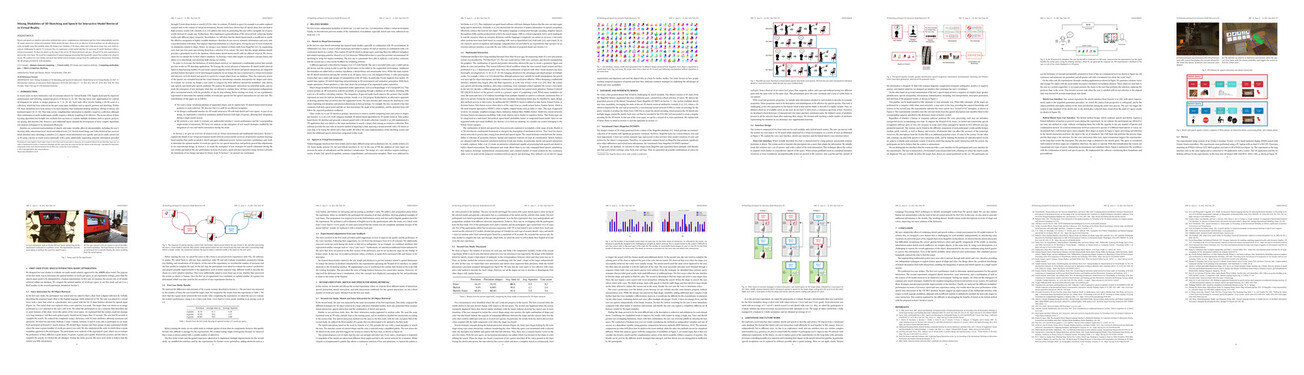

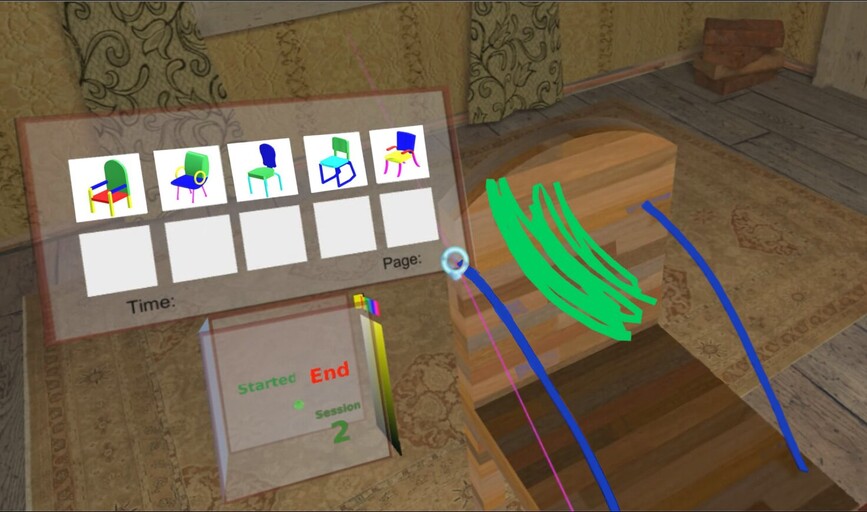

Sketch and speech are intuitive interaction methods that convey complementary information, and each has been used independently for 3D model retrieval in virtual environments. While sketch has been shown to be an effective retrieval method, not all collections are easily navigable using this modality alone. We design a new database of 3D chairs in which each part (arms, legs, seat, back) is colored, a property that is challenging to convey through sketch. To overcome this, we implement a multi-modal interface for querying 3D model databases within a virtual environment. We base the sketch component on the state of the art in 3D sketch retrieval and use a Wizard-of-Oz style experiment to process the voice input. This avoids the complexities of natural language processing, which frequently requires fine-tuning to be robust to accents. We conduct two user studies and show that hybrid search strategies emerge from the combination of interactions, fostering the advantages provided by both modalities.

- Paper (PDF, 39 MB)

- Paper (lowres) (PDF, 6.3 MB)

- Supplemental (PDF, 7.6 MB)

Dataset

- chairs_database.zip (313 MB)

- features_dictionary.xlsx (0.3 MB)

- segmented_chairs.unitypackage (98 MB)

Paper

Bibtex

@inproceedings{giunchi2021imx,
  author = {Giunchi, Daniele and Sztrajman, Alejandro and James, Stuart and Steed, Anthony},
  title = {Mixing Modalities of 3D Sketching and Speech for Interactive Model Retrieval in Virtual Reality},
  year = {2021},
  month = {June},
  address = {New York, NY, USA},
  booktitle = {ACM International Conference on Interactive Media Experiences},
  numpages = {17},
  location = {New York, NY, USA},
  series = {IMX'21}
}